2022 was a big year for competitive machine learning, with a total prize pool of more than $5m across all platforms.

This report reviews all the interesting things that happened in 2022, in two main parts. In Competitive ML Landscape we briefly review competition activity across each of the platforms, as well prize money and the types of competitions that were run.

In Winning Solutions we focus on the winners of those competitions, and we try to get to the core of what enabled them to win. We look at trends in types of modelling, programming language preferences, cross-validation methods, and other implementation details.

Highlights

- Successful competitors have mostly converged on a common set of tools — Python, PyData, PyTorch, and gradient-boosted decision trees.

- Deep learning still has not replaced gradient-boosted decision trees when it comes to tabular data, though it does often seem to add value when ensembled with boosting methods.

- Transformers continue to dominate in NLP, and start to compete with convolutional neural nets in computer vision.

- Competitions cover a broad range of research areas including computer vision, NLP, tabular data, robotics, time-series analysis, and many others.

- Large ensembles remain common among winners, though single-model solutions do win too.

- There are several active machine learning competition platforms, as well as dozens of purpose-built websites for individual competitions.

- Competitive machine learning continues to grow in popularity, including in academia.

- Around 50% of winners are solo winners; 50% of winners are first-time winners; 30% have won more than once before.

- Some competitors are able to invest significantly into hardware used to train their solutions, though others who use free hardware like Google Colab are also still able to win competitions.

Read on to find out more about platforms and competitions, or skip ahead to look at the technical details behind winning solutions.

Table of contents

Competitive ML Landscape

Notable Competitions and Trends

The largest competition by prize money was DrivenData’s Snowcast Showdown, sponsored by the US Bureau of Reclamation. A $500k prize pool was made available to participants to help improve water supply management by providing accurate snow-water-equivalent estimates for different areas of the Western United States. As always, DrivenData’s excellent meet the winners write-up and detailed solution reports are well worth reading through.

The most popular competition in 2022 was Kaggle’s American Express Default Prediction competition, with over 4,000 teams entering. $100k was awarded in prizes, split across the top four teams, for predicting whether customers would or would not pay back their loans. The first place prize was won by a first-time solo winner, with an ensemble of neural net and LightGBM models.

The largest independent competition was Stanford’s AI Audit Challenge, which offered a $71,000 prize pool for the best “models, solutions, datasets, and tools to improve people’s ability to audit AI systems for illegal discrimination”.

There were three competitions based around financial forecasting, all on Kaggle: JPX’s Tokyo Stock Exchange Prediction, Ubiquant’s Market Prediction, and G-Research’s Crypto Forecasting.

Computer Vision

The biggest class of machine learning competitions in 2022 was, broadly, computer vision problems — with over $1m in total prize money across more than 40 competitions. These include the Snowcast Showdown competition mentioned above, as well as problems like spotting oil slicks in satellite imagery, identifying cervical spine fractures from scans, segmenting functional tissue units in organ biopsies, and counting pests in agricultural images.

Conservation competitions

There were at least four competitions in 2022 which specifically involved building models to identify specific species or individual animals to aid conservation efforts. Kaggle’s Great Barrier Reef Starfish Detection competition asked participants to draw bounding boxes around starfish in underwater videos. DrivenData’s Where’s Whale-do and Kaggle’s Happywhale competition involved identifying individual whales and dolphins from photos. In Zindi’s Turtle Recall: Conservation Challenge, participants built a facial recognition model for turtles.

Medical scans

There were at least five competitions focused on analysing medical or biological imagery: Kaggle’s HuBMAP + HPA, Cervical Spine Fracture Detection, and GI Tract Image Segmentation competitions asked participants to segment medical images — identifying tissue units across organs, localising fractures, or tracking healthy organs. The STRIP AI challenge required classifying digital pathology images based on the type of stroke the patient had had. The NeurIPS Weakly Supervised Cell Segmentation Challenge aimed to benchmark cell segmentation methods that could be applied to various microscopy images across multiple imaging platforms and tissue types.

NLP

The second-biggest category was natural language processing (NLP) competitions, with over $500k in total prize money across more than 14 competitions. Most of the big pure-NLP competitions were on Kaggle, which hosted three NLP-based competitions focused on different aspects of education: segmenting essays, assessing language proficiency, and predicting effective arguments.

NLP + search

There were also some interesting competitions which combined NLP with other types of problems. For example, Amazon ran a three-track competition, hosted by AIcrowd, focused on different aspects of improving search results. This involved ranking products matching a search query, classifying matched products as exact/substitute/complement/irrelevant, and identifying substitute products.

NLP + reinforcement learning

The NeurIPS IGLU Challenge, also hosted on AIcrowd, had an NLP task. The competition focused on Human-AI collaboration through natural language, looking at AI agents’ ability to follow instructions in a collaborative Minecraft-like environment. The NLP task asked the agent, given instructions from a human Architect, to decide whether it has sufficient information to carry out the described task or if further clarification was needed. The agent was scored on both when they asked clarifying questions, and what to ask (from a given list of potential questions).

Sequential Decision-Making

Alongside the success of Reinforcement Learning (RL) in the past decade, with Atari DQN, AlphaGo and other major achievements, a new class of competitive machine learning problems — which we’ll call sequential decision-making problems — has been growing in popularity. As opposed to the typical train-set/test-set supervised learning setup, these problems provide participants with an environment that changes with time, and a set of possible actions that can be taken at a given timestep. This environment could represent, for example, a game world, or a simulation of a power network or transport network. In the case of game worlds, participants can be matched up against each other — like in the MIT Battlecode competition — or tasked with accomplishing certain feats — like building a house in Minecraft in the MineRL BASALT competition.

Kaggle responded to this growth in popularity by introducing Simulation Competitions in 2020, allowing these types of competitions to be run on its platform. AIcrowd has also run a number of these competitions. In 2022 there were over 25 of these interactive competitions with a total prize pool of more than $300k — and they didn’t all run in just a simulated environment!

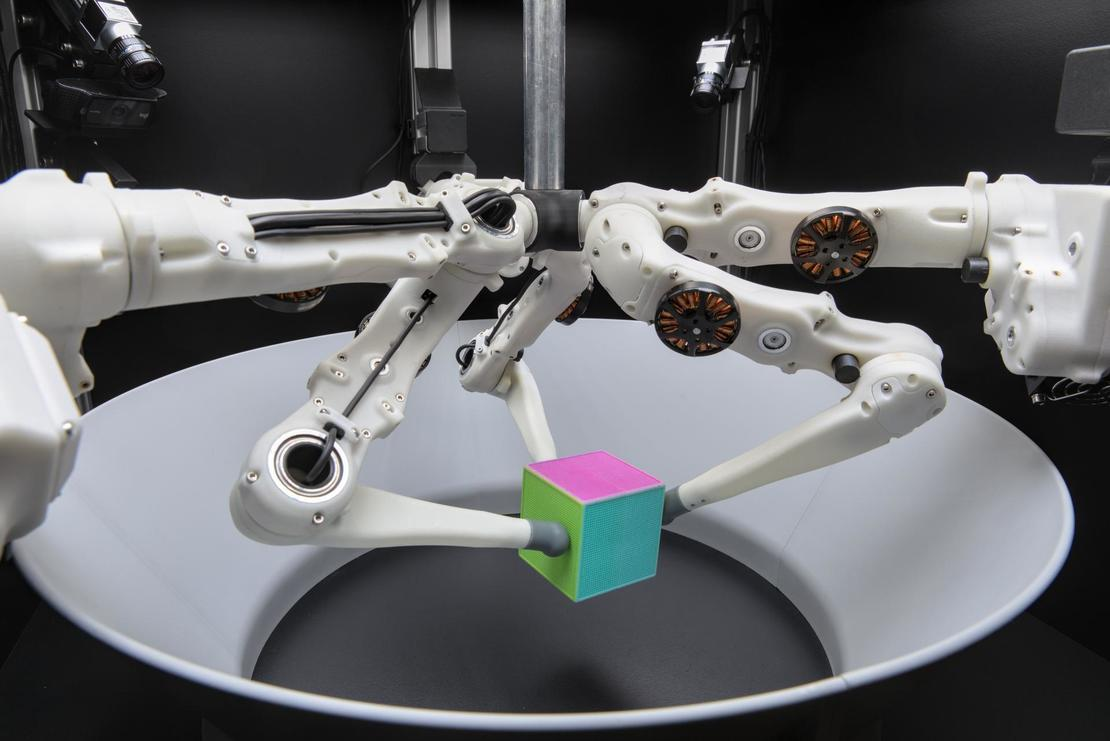

In the Real Robot Challenge, an official NeurIPS 2022 Competition, participants had to learn to control a tri-finger robot to move a cube to a target location or position it at a certain point in space, with the right orientation. Participants’ policies were run weekly on physical robots, and those runs were used to update the leaderboard. $5k in prizes were awarded, alongside the academic kudos of presenting at a NeurIPS Workshop.

Copyright: MPI for Intelligent Systems/Felix Widmaier (source)

Other Types of Competitions

There were many other competitions which don’t quite fit into the vision/NLP/RL buckets described above. Examples include graph learning, optimisation, AutoML, audio processing, security/privacy, meta-learning, causal inference, time-series forecasting, and analysis/visualisation.

Platforms

The competitive machine learning ecosystem is made up of a few large platforms running multiple competitions per year, as well as many special-purpose websites hosting individual competitions. ML Contests sits alongside these competition platforms, improving competition discovery by making it easy to look at ongoing competitions across all platforms in one place.

2022 Platform Comparison

Platforms vary in multiple ways. To give some examples:

- Kaggle, one of the most mature platforms, was acquired by Google in 2017 and has the largest community, having recently reached 10 million users. Running a funded competition on Kaggle can be quite expensive. As well as hosting competitions, Kaggle allows users to host datasets, notebooks, and models.

- CodaLab is an open-source competitions platform, with an instance maintained by Université Paris-Saclay. Anyone can sign up and host or take part in a competition. Free CPU resources are available for inference, and competition organisers can supplement this with their own hardware.

- Zindi is a smaller platform with a very active community, focused on connecting organisations with data scientists in Africa. Zindi also runs in-person hackathons and community events.

- DrivenData focuses on running competitions with social impact, and has run competitions for NASA and other organisations. Competitions are always followed up by in-depth research reports describing the solutions.

- AIcrowd started as a research project at EPFL, and is now one of the top five competition platforms. It has hosted several official NeurIPS competitions.

Overview of competition platforms1

| Platform | Founded | Users | Competitions (2022) | Total prize money (2022) | Typical entries2 |

|---|---|---|---|---|---|

| Kaggle | 2010 | 10m+ | 34 | $1,758,000 | 1200 |

| Tianchi3 | 2014 | ? | 25 | $1,492,120 | 50 |

| CodaLab | 2013 | 120k+ | 234 | $328,400 | 30 |

| Zindi | 2018 | 57k+ | 22 | $102,500 | 180 |

| AIcrowd | 20175 | ~60k | 19 | $258,300 | 30 |

| DrivenData | 2014 | ~100k | 12 | $760,000 | 50 |

| Signate | 20146 | 85k+ | 6 | $72,960 | 130 |

| EvalAI7 | 2017 | 28k+ | 5 | $19,000 | 10 |

| Waymo | 2020 | -8 | 4 | $88,000 | 20 |

| Xeek | 2022 | ~3k | 4 | $55,000 | 20 |

| DS Works | 20219 | ? | 4 | $92,000 | 50 |

| Other10 | - | - | 52 | $484,300 | 10 |

Note: the table above is shown with a reduced set of columns on mobile. For the full table, view this report on a larger screen.

Other Interesting Platforms

We exclude a number of interesting platforms from this report, either because they do not run competitions that fit our criteria11, or because they did not run any in 2022. Briefly:

- Numerai is a crowd-sourced quant fund that has paid out over $44m to data scientists since inception.

- The Makridakis Open Forecasting Center conducts forecasting research and runs leading time-series forecasting competitions. There were no competitions with deadlines in 2022, but the M6 competition finished in January 2023. We plan to include it in a future iteration of this report.

- microprediction runs ongoing time-series prediction challenges. It paid out around $50,000 in 2022. There are 1,000 users in the Slack group and around 500 live autonomous algorithms making predictions.

- crunchdao is a crowd-sourced quant fund, sharing returns across over 2,000 data scientists.

- OpenML is an open platform for sharing datasets, algorithms, and experiments. It hosts over 5,000 datasets and 24 benchmark suites, allowing researchers to review the performance of different algorithms in an open and reproducible way.

- CodaBench is a new platform, currently in beta, which supports CodaLab-style competitions with prizes and deadlines as well as OpenML-style ongoing benchmarks.

- bitgrit is an AI competition and recruiting platform with over 25,000 users, but did not run any eligible competitions in 2022.

- Hugging Face launched its 🤗 Competitions platform in February 2023, shortly before this report was published.

Academia

Most of the prize money for competitions run on the big platforms comes from industry, but competitive machine learning has a rich history in academia — as discussed in Isabelle Guyon’s Invited Talk at NeurIPS this year.

NeurIPS is one of the top global academic machine learning conferences, and has been home to papers introducing the most significant ML advances in the last ten years: AlexNet, GANs, Transformers, and GPT-3.

NeurIPS Competitions

NeurIPS first hosted a Challenges in Machine Learning (CiML) workshop in 2014, and has had a dedicated competition track since 2017. Since then, there has been steady year-on-year growth in both number of competitions and total prize pool - reaching almost $400k in 2022.12 For more information, see Isabelle Guyon and Evelyne Viegas' 2020 talk or 2022 slides on NeurIPS competitions: Evolution and Opportunities.

Other machine learning conferences have also hosted competitions — including CVPR, ICPR, IJCAI, ICRA, ECCV, PCIC, and AutoML.

Prize Money

Distribution

Around half of machine learning competitions we consider have a prize pool over $10k. While there are undoubtedly many interesting competitions without significant prizes (see, for example, Kaggle Community Competitions), for the sake of this report we consider only those with either monetary prizes or academic kudos. Often competitions associated with prestigious academic conferences provide winners with travel grants to allow them to attend the conference.

While some competition platforms do tend, on average, to have larger prize pools than others (see the platform comparison chart), many of the platforms had at least one competition with a very large prize pool in 2022 — the top ten competitions by total prize money include ones run on DrivenData, Kaggle, CodaLab, and AIcrowd.

Funding Sources

Historically, funding for competitions has often come from the following sources:

- Organisations looking for solutions to problems — such as NASA, or the US Bureau of Reclamation

- Organisations looking to hire data scientists — such as American Express, or G-Research.

- Competition platforms or technology providers incentivising data scientists to use their technology — such as Google, or Kaggle funding their own annual Santa competitions.

In 2022, a number of independent competitions were launched with prize money funded by FTX, mostly through the FTX Future Fund regranting program. This included the Trojan Detection Challenge, the Inverse Scaling Prize, the AI Worldview Prize, and the Autocast Competition.

These competitions generally focused on different aspects of AI safety — such as preventing attacks on AI systems, and forecasting how capabilities will scale with model size. This field is relatively new, and had not had much attention within the competitive machine learning space before 2022 (though it could be argued that the 2020 Deepfake Detection Challenge fits within this field).

FTX fallout

Most notably, with a $5m planned prize pool, the AI Worldview Prize was set to be the best funded competition of the year by far. With the collapse of FTX in November 2022, before the competition deadline in December 2022, the status of the competition became unclear. The competition page was hosted on the FTX Future Fund website, which is down as of the time of writing. The FTX Future Fund team resigned in November 2022, and with the ongoing bankruptcy proceedings it seems exceedingly unlikely that any prize money will ever be paid out for this competition. In late November, Open Philanthropy announced that they would be running a version of this competition in 2023, albeit with a smaller prize pool.

Open Philanthropy seem to have also stepped in to fund the Trojan Detection Challenge. The Inverse Scaling Prize organisers have found an alternative source of funding for at least a portion of their prize pool. As for the Autocast Competition, an employee of the Center for AI Safety stated on Reddit that they are now funding the prizes directly themselves.

Participation

Some competitions are much more popular and competitive than others.

Most machine learning competitions allow participants to collaborate on solutions as part of a team, sometimes with a limit on the number of participants per team.

One way to measure the popularity or competitiveness of a competition is to look at the number of teams13 who managed to submit a valid solution to the leaderboard — we’ll call this the number of leaderboard entries.

Leaderboard entries

Academic competitions often have lower participation

Most competitions had at least 50 entries, but there were a few tiny competitions with fewer than ten entries — mostly academic competitions run outside of the main competition platforms. These competitions often have a relatively high barrier to entry, based on the difficulty of the problems tackled. This doesn’t reflect the quality of competition, as they often attract experienced researchers specialising in a niche research area relevant to the competition.

Discoverability is harder for independent competitions

Large competition platforms make it easy for their users to enter new competitions, and can advertise new competitions to their users via their mailing list or home page. Independent competitions — run on purpose-built websites made to host just one competition — don’t have an existing user-base to leverage.

Organisers of these competitions tend to have to rely on social media or more general mailing lists to attract competitors. This discoverability problem was the main driver behind the creation of ML Contests, which provides a directory of ongoing competitions across all platforms, and one place for aspiring competitors to check.

Several Kaggle competitions had more than 1,000 teams competing

At the other end, we find twenty Kaggle competitions with over 1,000 teams entering. Kaggle makes it easy for newcomers to run code in notebooks on their platforms, and to copy basic solutions from other teams. Kaggle competitors are incentivised to share, through the progression system, where users can earn medals when others “like” their code notebooks or discussion posts. This leads to a collaborative atmosphere which makes it very easy for newcomers to create a valid solution — without necessarily creating a solution that could be competitive.

The most popular competition outside of Kaggle was Zindi’s Uganda Air Quality Prediction challenge, with 239 teams entering. Zindi had four other competitions with over two hundred teams on the leaderboard.

Purpose

One way to think of competitive machine learning is as a sandbox for evaluating predictive methods.

The way most competitions work is that competitors calibrate their model on a training set, can measure their progress against a public test set, which defines the public leaderboard, and are ultimately judged against a private test set — which is only revealed at the end of the competition.

A well-run competition has:

- An interesting problem to solve, along with training data.

- A set of highly capable potential participants.

- A mechanism to penalise participants for overfitting (the private test set).

- Sufficient (financial) incentive for competitors to apply real effort to solving the problem.

- A public review of the winning solutions (after the competition ends).

These five characteristics are rarely (if ever!) found together outside competitive machine learning. Academia has some of them, but preventing overfitting is almost impossible, as demonstrated by ongoing replication issues.

Machine learning in industry has many of the five characteristics, except for an incentive to publish solutions which truly give teams an edge in a timely way.

From this point of view, competitive machine learning is the best tool for finding an answer to the question: which predictive methods work best for particular problems?

This is the question we try to answer in the next section.

Winning Solutions

We took two approaches to analysing winners14 of competitions that took place in 2022:

- We tried to capture key differences between teams and solutions by answering key questions15.

Where possible, we got answers by asking the winning team.

Where we weren’t able to do this, we extracted as much as we could from public sources like discussion forums, source code, or the readme on public repositories. - Where source code was made publicly available, we analysed it to extract information about languages and packages used.

We think there’s great value in analysing winning solutions, and that this area does not get the attention it deserves. The best currently available resource is DrivenData’s blog, where they review the results of each of their competitions and publish detailed write-ups by their winning participants.

While Kaggle’s Data Science & Machine Learning Survey provides great insights into the demographics and habits of the larger community, half of the respondents to that survey have less than three years of programming experience, and a quarter have less than a year’s experience16. By restricting our scope to only the most successful competitors, we hope to learn about the habits and tool choices that contribute to that success.

We will start by reviewing general tool choices — programming languages and software packages — and then dive into specific types of competitions, looking at modelling choices in more detail. Finally, we review hardware and compute usage, as well as analysing winning team demographics.

Winning Toolkit

Python is the clear favourite among winners

Python is, almost unanimously, the language of choice among competition winners.17 This is unlikely to come as a huge surprise to anyone in the field, though one might have expected R or Julia to make more of an appearance. Among those who used Python, around half primarily used Jupyter notebooks, and the other half used standard Python scripts.18

Primary Programming Language

The one winning solution primarily using R was… somewhat of an anomaly. Amir Ghazi won Kaggle’s competition to predict the winners of the 2022 US men’s college basketball tournament. He did this by using — apparently verbatim — the code for the winning solution to an equivalent competition in 2018, authored by Kaggle Grandmaster Darius Barušauskas. As crazy as that already is, Darius entered the 2022 competition with a new approach… and came in 593rd place! So much for sharing code.

C++ was the most common secondary language

The one winning solution primarily using C++ was for the ICRA Benchmark Autonomous Robot Navigation Challenge, which requires controlling a Clearpath Jackal robot in real-time. The team first tried using inverse reinforcement learning, but found that classical navigation algorithms worked better. They settled on C++ for their navigation and localisation stack to allow them to perform real-time control at 40Hz. Given the limited resources of the dual-core on-board computer on the robot, this may have been difficult to achieve using Python. Three other teams who primarily used Python also used C++ for some components of their solution — for example, for making tweaks to a drone simulation environment written in C++.

Two teams who primarily used Python for modelling used R for preprocessing — in at least one of the cases, this was because the team member working on the preprocessing pipeline was more familiar with R than Python.

Most of the teams using Python didn’t find the need to use any additional languages beyond shell scripts.

Some competitions restrict language choice

Sometimes competition rules restrict language choice. Some platforms such as Xeek accept only submissions in Python. Some code submission competitions (e.g. those on DrivenData) require use of Python. Some competitions simply exclude commercial languages (contributing to the absence of MATLAB in these results).

The winning solution using Java was for MIT’s Battlecode competition, which requires Java submissions. Some Python was also used: “It’s also common to use Python to automatically generate thousands of lines of hardcoded java for the bot to run the code faster than in a loop (loops are considered quite expensive).”19 Resource constraints mean that this competition tends to be won using sophisticated heuristics-based approaches, rather than, say, deep reinforcement learning.

Python Packages Used by Winners

On reviewing the packages used by winners in their solutions, we were surprised by how much overlap there was between winning solutions. All winners who used Python used the PyData stack to some extent.

We have compiled the most popular packages into three toolkits — one core toolkit, one for NLP, and one for computer vision. Anyone putting these tools at the core of their solution is on a level playing field with the best in the arena.

Competitive Machine Learning Toolkit

PyData

Deep Learning

Gradient Boosted Decision Trees

Hyperparameter Optimisation

Experiment Tracking

Visualisation

NLP Toolkit

NLP

Computer Vision Toolkit

Computer Vision

Deep Learning

The growth of PyTorch has been consistent and steady, but the jump from 2021 to 2022 was significant — with PyTorch going from 77% of winning solutions to 96% of winning solutions.20

No competition for PyTorch

Out of 46 winning solutions using deep learning, 44 used PyTorch as their main modelling package, and only two used TensorFlow. What makes this even more stark is that one of the two competitions won using TensorFlow - Kaggle’s Great Barrier Reef Competition — offered an additional $50k in prizes which could only be won by teams using TensorFlow! The other competition won using TensorFlow used the high-level Keras API.

Deep Learning: PyTorch vs TensorFlow

While there were three instances of winners using pytorch-lightning and one using fastai — both built on top of PyTorch — the vast majority used PyTorch directly.

It feels like, for competitive ML at least… PyTorch has won. This is in line with trends in ML research more broadly, as shown by Papers With Code.21

Notably, we did not find any instances of winning teams using other neural net libraries like JAX (built by Google, and used by DeepMind), PaddlePaddle (developed by Baidu), or MindSpore (developed by Huawei).

Computer Vision

Computer vision competitions encompass many types of tasks:

- Image Classification, such as Kaggle’s Categorising Stroke Types from Digital Pathology Images competition

- Object Detection, like detecting starfish in video footage from the Great Barrier Reef.

- Image Segmentation, like segmenting the stomach and intestines on MRI scans.

- Learning to rank, like finding pictures of individual whales in a database of similar images

Status quo: CNNs

At the core of each of these is the problem of taking image data — usually a two-dimensional array of pixels — and extracting useful information from that. Over a decade ago now, with AlexNet, convolutional neural nets (CNNs) became the state-of-the-art architecture for this type of problem. They are particularly well-suited, since they make use of the hierarchical structure of images, identifying small-scale features and building those up into representations of larger and larger parts of the image.

Enter Transformers

More recently, the introduction of the Vision Transformer and Swin Transformer in 2020/2021 showed that models based on Transformers — a relatively new neural net architecture which has completely taken over language modelling from recurrent neural nets — can also be competitive in computer vision, and potentially perform better than traditional CNN-based models.

As pointed out by Andrej Karpathy (ex Stanford, OpenAI, Tesla, now back at OpenAI) at the end of 2021, it looked like neural net architectures across different areas were all converging on Transformer architectures:

The ongoing consolidation in AI is incredible… [around a decade ago] vision, speech, natural language, reinforcement learning, etc. were completely separate… as of [approximately the] last two years, even the neural net architectures across all areas are starting to look identical - a Transformer.

- Andrej Karpathy, December 2021

CNNs strike back?

But when it comes to computer vision in 2022, the jury is still out. At CVPR 2022, the ConvNext architecture was introduced as “A ConvNet for the 2020s”, and shown to outperform the recent Transformer-based models 22. This was used in at least two competition-winning computer vision solutions, and CNNs in general were still by far the most popular neural net architecture among computer vision competition winners.

Neural Net Architectures for Computer Vision

Pre-trained models are essential

Another way in which computer vision is quite similar to language modelling is the use of pre-trained models: well-understood architectures trained on a public corpus of data, such as ImageNet. The most popular repository for these is the Hugging Face Hub, accessed through the timm library, which makes it trivial to load a pre-trained version of dozens of different computer vision models.

The advantage of using pre-trained models in areas like computer vision and NLP is obvious: real-world images, and human-generated text, all have some shared features — and using a pre-trained model confers the benefit of that general knowledge, similar to having used a much larger, more general set of training data.

Fine-tuning almost always helps

Usually pre-trained models are then fine-tuned — trained further — on task-specific data, such as the data provided by the competition organisers. Usually, but not always! The winners of the Image Matching Challenge used pre-trained models without any fine-tuning at all — “since the quality of the training and test data [varied] in this competition, we did not conduct [fine-tuning] using the provided training data because we thought it would be less effective” — a decision that paid off.

Pre-Trained Model Families for Computer Vision

The most popular type of pre-trained computer vision model among winners in 2022, by far, was EfficientNet, which has the advantage of being, as the name suggests, much less resource-intensive than many other models.

No one augmentation strategy to rule them all

Aside from significant use of pre-trained models, primarily CNNs, there was quite a lot of variety among winning solutions:

- Training-time data augmentation (generating additional training data by transforming existing training data) was common, often making use of the Albumentations library.

- Mixup, another augmentation strategy, was also mentioned by quite a few winners.

- Use of test-time augmentation (performing inference over several, transformed, versions of the input, and using an aggregate prediction) was mixed. Some had good success with it, whereas others found that it did not work as well as alternatives.

NLP

Since their inception in 2017, Transformer-based models have dominated natural language processing (NLP). The Transformer23 is the ‘T’ in BERT and GPT, and is the core neural net architecture underlying ChatGPT.

Transformers continue to dominate

It’s therefore unsurprising that all winning solutions in NLP competitions had Transformer-based models at their core. It’s only slightly less unsurprising that they were all implemented in PyTorch. They all made use of pre-trained models, loaded using Hugging Face’s Transformers library, and almost all of them used versions of Microsoft Research’s DeBERTa model — usually deberta-v3-large.

Transformers continue to eat up compute

Many of these required significant compute resources. For example, the Google AI4Code winner ran an A100 (80GB) GPU for around 10 days to train a single deberta-v3-large for their final solution. This approach was the exception (using a single main model and a fixed train/eval split) — all other solutions making heavy use of ensembles, and almost all using various forms of k-fold cross-validation. For example, the winner of the Jigsaw Toxic Comments competition used a weighted average of the output of 15 models.

Transformer-based ensembles were sometimes combined with LSTMs, or with LightGBM.

There were also at least two instances of pseudo labeling being used effectively in winning solutions.

Tabular Data

One area where deep learning’s dominance is still being contested is in tabular data problems — broadly, any problem where the training data is structured as rows and columns of scalar numerical values. Many “business ML” problems fall into this category.

Modelling

While there are a large number of special-purpose deep learning methods for tabular data, the jury is still out on how these stack up against the previous state of the art in tabular data — gradient boosted decision tree (GBDT) models like XGBoost.

While many of the papers introducing new tabular deep learning methods claim superior performance, these are often followed by review papers showing that this performance does not generalise. For an overview of the literature on this, see A Short Chronology of Deep Learning for Tabular Data.

GBDTs still win at pure tabular data, but neural nets have their place

Pure tabular data competitions, where there is no additional structure to exploit, tend to be won by GBDT models or ensembles of both GBDT and neural net models.

Competitions where tabular data has some underlying structure that is well-suited to specific neural net architectures, such as sequence data to RNNs/Transformers or image-like data to CNNs, tend to be won by neural net models or ensembles of both GBDT and neural net models.

LightGBM is now more popular than XGBoost among winners

XGBoost used to be synonymous with Kaggle. However, LightGBM was the clear favourite GBDT library among winners in 2022 — the number of mentions of LightGBM by winners in their solution write-ups or to our questionnaire was the same as those of CatBoost and XGBoost combined. CatBoost was second, and XGBoost a surprising third.

For more detail, see the competitive machine learning toolkit page.

Dataframes

Pandas has long been the de-facto dataframe library in Python, but it is far from perfect. There has been a proliferation of alternatives in recent years — libraries such as Polars, Dask[dataframe], Modin, Vaex, RAPIDS cuDF, and PyArrow — looking to build on Pandas and replace or extend it.

Pandas reigns supreme

Despite all this, we found 87% of winners using Pandas and… none using any of the alternatives.24

This was surprising! Each of these alternatives have their own niches (scale, speed, distribution), and seem well-established enough to see some use. It’s unclear exactly why this has happened. A few possible reasons:

- The competitive machine learning community often shares code during competitions, with competitors building on others’ solutions. It’s possible that diverging from the commonly used stack of libraries makes it harder to integrate code shared by others.

- Pandas has existed for a long time, and is backed by NumPy arrays. NumPy generally interfaces well with other libraries in the PyData ecosystem, including scikit-learn, which is often used for calculating metrics as well as data preprocessing/transformation.

We will be following the development of this over the next few years, especially with the increasing support for Apache Arrow (which backs Polars) across various projects, including in Pandas 2.0.

Cross-validation

Wily competitors understand the importance of good cross-validation. Broadly, there are three ways of measuring how good your model is:

- On the training data (by training only on part of the data, and using the remainder for validation — often referred to as cross-validation or simply “CV”)

- On the public leaderboard (calculated on public test data)

- On the private leaderboard (calculated on private test data)

The third one is what ultimately matters for winning a competition, but isn’t available until after the competition ends. (see this section for a brief explanation of the different test data sets)

Leaderboard overfitting happens

Novices often focus too much on the second — ‘overfitting to the public leaderboard’, by making lots of submissions with small changes, and considering it a success if they move up the leaderboard. The danger is that the changes in model performance don’t always translate to the private leaderboard, especially if the public dataset is very small25.

One way to measure public-leaderboard overfitting is to see how much competitors’ rankings change between the public and private leaderboards. In the chart below we look at the public leaderboard position of the eventual winner (#1 on the private leaderboard).

Eventual Winner’s Public Leaderboard Score

Public leaderboard winners often end up winning on the private leaderboard…

Largely, public leaderboard rankings seem to have been a good indicator of private leaderboard scores, with the #1 private leaderboard spot going to the #1 public leaderboard team in 43% of competitions, and the private leaderboard winner coming from the top 5 spots on the public leaderboard in over 80% of cases. But there’s a long tail!

…but some competitions have huge jumps from public to private

Some examples of extreme leaderboard jumps from this past year26:

- In Kaggle’s Jigsaw Comment Toxicity Competition, the eventual winner was ranked #1853(!) on the public leaderboard.

- In Kaggle’s Blood Stroke Clot Origin Classification Competition, the winning team was ranked #471 on the public leaderboard.

- In Kaggle’s Great Barrier Reef Competition, the winning team was ranked #121 on the public leaderboard.

Good cross-validation helps prevent leaderboard overfitting

So how do we combat over-reliance on public leaderboard scores? The answer is cross-validation. By training only on part of the training data, and measuring the performance on the remaining data, we can get an idea of how well our model generalises. This doesn’t guarantee that it will generalise well on the test set (that could be drawn from a different distribution!), but it would show if we were overfitting to part of the training set.

Cross-Validation Methods Used by Winners

The two most common ways to approach cross-validation are:

- Fixed evaluation/hold-out set: choose a subset of the training data, and set that aside. For example, randomly taking 20% of the data. Then train on the other 80% of the data, and evaluate your model on the 20% that was “held out”.

- K-fold cross-validation: split the data into k roughly equal partitions, or “folds”. Then train the model k times, excluding one of the folds each time, and evaluating the model’s performance on that fold.

K-fold cross-validation proves most popular despite computational burden

In general the first method is easier and less compute-intensive, but the second makes better use of the available training data, and proved to be the more popular one among winners.

There were many types of k-fold cross-validation used by winners in 2022: standard, stratified, purged, stratified in groups, and multi-label stratified. The winner of the AutoML Decathlon 2022 described their cross-validation process as “online single-fold”.

Ensemble Methods

Competitive machine learning is notorious for its use of ensembles, where many models are combined into one more powerful model. This is one key area where competitive machine learning diverges somewhat from machine learning in production — creating larger ensembles can contribute to marginal improvements in predictive performance, which do not always justify their cost.

While it makes sense to keep adding to a cobbled-together solution used only once to climb up a leaderboard, the additional complexity, reduced maintainability, and loss of interpretability often mean excess ensembling is undesirable in production settings.

Ensemble methods remain popular among winners

Ensemble models continued to be very successful and popular among winners in 2022, in two ways:

- Gradient boosting methods, like GBDTs (LightGBM, XGBoost, CatBoost), all build ensembles of decision trees. These continue to be the tool of choice for tabular data problems, and are often used as components in computer vision and NLP problems. We will refer to these as implicit ensembles, since the ensemble construction is done by the modelling library, with minimal input from the user beyond hyperparameter choices.

- Explicit ensembles (our terminology), where the results of multiple separately trained models are aggregated (usually through a weighted average), were also very popular. We found that over half of winning solutions made use of some sort of explicit ensemble. Sometimes this involved averaging over a GBDT and a Neural Net model (common in tabular data problems), or taking a weighted rank average over several similar NLP models with varying architectures, trained on different subsets of training data. (a form of bagging).

For a good introduction to ensemble methods (and much more!), see the free book An Introduction to Statistical Learning.

Compute and Hardware

We would love to be able to measure the total computing power used by each of the winners in producing their solution — for example, the total number of floating point operations carried out across EDA, initial experiments, hyperparameter tuning, and the final training run. Unfortunately this isn’t really feasible, and so we are settling for some proxy measures.

Firstly, the hardware used.

Hardware Used by Winners

A mix of hardware types were used. As expected, most winners used GPUs for training — this can vastly increase training performance for gradient boosted trees, and is practically a must for deep neural nets.

Quite a few winners had access to clusters provided by their employer or university, usually including GPUs.

No TPUs or Apple Silicon

Somewhat surprisingly, we didn’t find any instances of Google’s Tensor Processing Units (TPUs) being used for training winning models. We also did not see any mentions of winners training models on Apple Silicon, which has been supported by PyTorch since May 2022.

Google Colab can be enough

Google’s cloud notebook solution, Colab was popular — with one winner using the free tier, one using the Pro tier, and one using Pro+ (we were not able to determine the tier used by the fourth winner using Colab).

Local personal hardware was slightly more popular than cloud hardware, though nine winners mentioned the GPU models they used for training without specifying whether they used local or cloud GPUs.

GPU Models Used by Winners

NVIDIA, NVIDIA, NVIDIA

We found mention of 10 different GPU models used by winners for training — all NVIDIA GPUs. While PyTorch added support for AMD’s ROCm platform in 2021, AMD’s GPUs still lag behind in deep learning.

Out of the three main GBDT libraries, LightGBM is the only one that supports AMD GPUs. XGBoost and CatBoost only run on NVIDIA GPUs for now.

High-end GPUs are common…

The most popular GPU was the NVIDIA A100 (we’ve bucketed the A100 40GB and A100 80GB models together, since winners did not always distinguish between the two). Often multiple A100s were used — for example, the winner of Zindi’s Turtle Recall competition used 8 A100 (40GB) GPUs, and two other winners used 4 A100s.

These are considered data-centre products, and, as mentioned below, the cost of renting these GPUs can add up quickly. Buying a single A100 to own outright would set you back over $10,000. The A100’s top spot could be challenged in 2023, as the A100 is now covered by U.S. export restrictions, limiting NVIDIA A100 sales into China and Russia27.

The A6000 is popular too — and a 2x A6000 configuration was used by Kaggle Grandmaster Qishen Ha to win two competitions this year, as part of the desktop computer he was given by HP for being part of their data science ambassador program. The cost of a single A6000 is closer to $5,000.

…but consumer GPUs work too

It’s reassuring that consumer-level GPUs make an appearance — with the RTX 2070, RTX 2080Ti, and RTX 3090 being found in higher-end gaming PCs, and costing $300-$2,000 new, with significant discounts for used models. These are often available at under $1/hour on cloud compute services.

Dataset Size and Training Time

Aside from the specific hardware used, two useful proxies for the amount of computing power required to win at these competitions are dataset size and training time. Both are difficult to measure! It’s very difficult to have a definition of these that’s comparable across solutions and easy for competitors to track.28 In the end, we settled on these two questions:

- What is the total size of the dataset made available to participants, in gigabytes? (as provided)

- Roughly how long did the final training run take?

While these are obviously ambiguous, they are at least somewhat easy to measure, and we hoped that the increased sample size from the ease of measurement would outweigh the lack of precision. For dataset size, we took the size of the data as provided to participants — often uncompressed csv files.

Dataset Size

There was enormous variation in the amount of data made available for competitions, across more than five orders of magnitude. On the lower end, Kaggle’s Patent Phrase Matching competition provided just over 2MB of data, though — importantly — the use of external training data was allowed. On the other end of the scale, DrivenData’s Air Quality competition provided over 2TB of data, AIcrowd’s MineRL Basalt 650GB, and Waymo’s open data used for their four challenges comprises around 400GB of training data plus 40GB each of validation and test data.

Training Time

Training time was also highly variable, likely at least partly because of the ambiguous nature of the question. Where possible we excluded the pre-processing/feature generation time, when that could be skipped in subsequent runs.

Some use free compute, others spend significant amounts

Zindi’s Alvin Transaction Classification challenge was won by a model trained for less than half an hour on a GPU using Google Colab’s free tier. At the other end, the winning solution for Kaggle’s Google AI4Code competition trained for over ten days on an NVIDIA A100 (80GB), on rented cloud compute, likely incurring around $500 in cloud compute costs just for the final training run29, and the Google Universal Image Embedding competition was won by a solution trained for 20 days on a 4 NVIDIA A100 GPUs, which would likely cost over $2,000 if done using cloud compute.

Team Demographics

Many competitions allow a maximum of five participants per team, and teams can be formed by individuals or smaller teams ‘merging’ together by a certain date before the submission deadline.

Some competitions allow larger teams — for example, Waymo’s Open Data Challenges allow up to ten individuals per team.

Winning Team Sizes

Almost half of all winners are solo winners

Impressively, almost half of the winning teams were solo winners. Winning a competition alone is a real feat, as larger teams benefit from splitting up tasks (for example, one person focusing primarily on data preprocessing/input generation), and model ensembles benefit from a diverse set of approaches.

On the other end of the scale, this year’s Waymo 3D Camera-Only Detection challenge was won by a ten-person team.

Another interesting dimension is the winning team’s track record. Some people take part in competitions which are particularly suited to them, or in their specialty area of research. Other people are effectively professional competitors, learning what they need to be able to compete at the highest levels across almost any competition30.

First-time winners are common

Just over half of competitions in 2022 were won by first-time winners — which is encouraging to new entrants! Just under a third were won by what we’re calling serial winners — teams who had already won more than one competition at the time of winning.

Some people win over and over

These serial winners include people like H2O.ai’s Team Hydrogen, who won two Kaggle competitions in 2022, H2O’s Qishen Ha, who also won two Kaggle competitions in 2022, including one solo win, and Stella Kimani, who won three(!) Zindi competitions in 2022 alone.

Repeat Winners

Experience is clearly an advantage when it comes to competitive machine learning. Firstly, certain pieces of work can be re-used across competitions — image preprocessing, calculating metrics, a generic training pipeline, and others.31

Secondly, competitors with a proven track record sometimes receive computing hardware which can give them an edge in future competitions — for example, from Z by HP’s Data Science Ambassadors program, which counts Kaggle Grandmaster Qishen Ha among its members.

We attempted to track winning teams’ professional affiliations, but this proved difficult in many cases. Most notably, we found that members of H2O.ai’s team of Kaggle grandmasters won at least five competitions in 202232, and at least three competitions in 2022 were won by teams with members who work at Preferred Networks.

Conclusion

This was a wide-ranging look at competitive machine learning in 2022. Hopefully, whether you are new to competitive ML or a multiple-times winner, you’ve picked up something useful that you can use in your next machine learning project.

2023 has already got off to a great start with lots of exciting new competitions, and we’re looking forward to publishing more insights when these competitions wrap up.

In the meantime — for a more general introduction to competitive machine learning, read The Kaggle Book. For an introduction to machine learning and statistical inference, read An Introduction to Statistical Learning. For a wealth of information on winning strategies, see the DrivenData blog. To find a competition to take part in, visit our homepage.

About This Report

If you would like to support this research, please check out our sponsors below, subscribe to our mailing list, follow us on Twitter, or join our Discord community.

About Our Sponsors

G-Research is Europe’s leading quantitative finance research firm. We hire the brightest minds in the world to tackle some of the biggest questions in finance. We pair this expertise with machine learning, big data, and some of the most advanced technology available to predict movements in financial markets.

G-Research is currently hiring Machine Learning Researchers, Quantitative Researchers, Quantitative Analysts, and other roles in London (UK).

Genesis Cloud is a European cloud infrastructure provider that specializes in Machine Learning, Rendering, Blockchain, Transcoding, and IT Security-related workloads. Having recognized the importance of sustainability for data center operations, Genesis Cloud was founded in 2018 in Munich to become the first green IaaS cloud provider.

The company operates fully green data centers, powered by 100% renewable energy sources and makes Accelerated Cloud Computing more efficient, accessible, and sustainable.

Set up your first instance now with $50 of free compute credits.

About ML Contests

For over three years now, ML Contests has provided a competition directory and shared insights on trends in the competitive machine learning space. To receive occasional updates with key insights like this report, subscribe to our mailing list.

Methodology

Data was gathered from correspondence with competition platforms, organisers, and winners, as well as from public online discussions.

In the Winning Solutions section of the report, we restrict ourselves to measurable competitions, with pre-defined, objective, numerical metrics determining the leaderboard ranking — excluding analytics competitions like DrivenData’s PETs Challenges, Kaggle’s NFL Big Data Bowl, Stanford’s AI Audit Challenge, and Zindi’s Text Cleaning and Augmentation Competition.

We were able to gather information on winning solutions for 67 of these competitions. In 26 of the 67, the answers came from the winners completing a questionnaire we sent them. The set of competitions for which we have some information on the winning solution covers over $2.2m of the total prize pool available in 2022. For 43 competitions, we also found the winning solution’s source code, and for 40 of those we managed to get the list of Python packages that were imported.

Sometimes, even though we weren’t able to gather information on the methods used by the winners, we were able to gather other information:

- Leaderboard entries contains information for 104 competitions in 2022.

- Eventual winner’s public leaderboard score contains data for 67 competitions in 2022.

- Winning team sizes contains data for 99 competitions in 2022.

- Repeat winners contains data for 78 competitions in 202233.

In Competitive Machine Learning Toolkit, the percentages given are as a proportion of the 40 solutions for which we were able to find Python package data.

Acknowledgements

Thank you to Jackee Brown, Peter Carlens, and Eniola Olaleye for help with data gathering.

To Adrien Pavao and Fritz Cremer for sharing insights into the broader competitive machine learning ecosystem, and tabular data competitions on Kaggle.

To the teams at Zindi (Amy Bray, Agnes Wanjiru, Paul Kennedy, Delilah Gesicho), DrivenData (Isaac Slavitt, Greg Lipstein), AIcrowd (Sneha Nanavati), EvalAI (Rishabh Jain), Waymo (Scott Ettinger), and Xeek (Nate Suurmeyer, Mack Starnes) for providing data on their platform’s competitions and reaching out to their winning teams.

Thank you to the organisers of the Battlecode, Weather4Cast, ORBIT, Panoptic Scene Graph Generation, Vehicle Routing, Real Robot, Cross-Domain MetaDL, SeasonDepth Prediction, Reconnaissance Blind Chess, Sensorium, Meta-learning from Learning Curves, DodgeDrone, BARN, and ODOR competitions, for providing information on their competitions and the winning teams.

Thank you to the competition winners who took the time to answer our questions by email or questionnaire: Adeyinka Michael Sotunde, Pham Vu Hung, Stephen Kolesh, Plato, Muhamed Tuo, daishu, Haresh Karnan, Isaac Liao, Yvo Keuter, Shaoshuai Shi, Li Jiang, Dengxin Dai, Bernt Schiele, Petr Šimánek, Jiayuan Gu, Anvay Shah, Shivaram Kalyanakrishnan, Adrian Hoffmann, Yide Huang, Qiang Wang, Christian Simon, KunHao Yeh, Zhirui Zhou, Jinyu He, Lei Zhang, Xiawu Zheng, TrueFit, Li Gu, Patrick Sean Klein, Kai Jungel, Leo Baty, and others who preferred not to be named.

Thank you to both of our sponsors for putting their trust in us and sponsoring the first of our State of Competitive Machine Learning reports: G-Research, and Genesis Cloud, who, in addition to sponsoring, generously offered $100 in compute credits to all winners who completed our questionnaire.

Lastly, thank you to the maintainers of the open source projects we made use of in conducting our research and producing this page: Hugo, Tailwind CSS, Chart.js, Linguist, pipreqs, and nbconvert.

Attribution

For attribution in academic contexts, please cite this work as

Carlens, H, “State of Competitive Machine Learning in 2022”, ML Contests Research, 2023.

BibTeX citation

@article{

carlens2023state,

author = {Carlens, Harald},

title = {State of Competitive Machine Learning in 2022},

journal = {ML Contests Research},

year = {2023},

note = {https://mlcontests.com/state-of-competitive-machine-learning-2022},

}

-

At this stage of the report, we also include competitions that might be better described as “competitive data science”, which involve analysing data and presenting insights, rather than building models to beat a specific benchmark. The Winning Solutions section of the report focuses solely on model-building/predictive competitions. ↩︎

-

Roughly: the number of people/teams that tend to enter each competition. Where we are able to get this data in a structured form, we calculate the median number of valid leaderboard entries over all competitions on a given platform. Rounded to the nearest 10. Where we weren’t able to get the data in a structured form, we sampled a few competitions by clicking through the competition pages. ↩︎

-

Due to language difficulties and a lack of familiarity with the platform, we’ve been unable to verify the state of Tianchi, Signate, and DS Works competitions to the same extent as the other platforms. It seems possible that there might be some duplicate or inactive competitions, and we have reached out to the Tianchi team to clarify but have not received any response yet. We plan to update these figures if we receive any future clarifications. For the same reason, we have possibly excluded other platforms which are not English-first. We are open to including these platforms in future publications if the data can be provided to us. ↩︎

-

Since CodaLab allows anyone to register and host a new competition, and prizes are not always represented in a structured way, it is difficult for us to verify how many competitions there were which met our criteria. According to CodaLab’s wiki, around 200 competitions were run in this period. However, this includes low-stakes competitions such as those run as exercises for educational courses, as well as some duplicates. The competitions and prize figures we have listed here are for competitions where we are confident that they are unique and represent genuine prizes. ↩︎

-

AIcrowd initially started as a research project at EPFL, then known as crowdAI.org. ↩︎

-

While we weren’t able to verify with the Signate team when the site was founded, the first competition listed on the platform took place in 2014. ↩︎

-

We received competitions data from EvalAI close to publishing time and were unable to review it fully before publishing. As there is no structured way for us to check the prize money awarded for each competition, it’s not easy to verify which of the many competitions meet all our criteria. Because of this, we relied on a shortlist of competitions provided to us by the EvalAI team, alongside EvalAI competitions that we had previously listed on our site. Because of this, it’s likely that the numbers quoted here for EvalAI are lower than in reality. For context, we were told by the EvalAI team that they have hosted over 270 challenges since inception, from over 40 organisations from academia and industry. ↩︎

-

Anyone with a Google account can enter the Waymo Challenges. ↩︎

-

“SberCloud… has created a Data Science Works (DS Works) platform… announced by David Rafalovsky… during the international conference AI Journey 2021.” MarketScreener ↩︎

-

Competitions organised outside the main competitive machine learning platforms sometimes take place on special purpose sites — for example, MIT’s Battlecode competition, or IARAI’s Traffic4Cast competition. Alternatively, some competitions are organised on generalist competition platforms with a broader focus than data science/ML, such as Topcoder. ↩︎

-

In order to include only competitions likely to be of interest, we restrict ourselves to competitions which either have a prize pool of at least $1k, or are linked to a prestigious academic conference. Though we use both the terms data science and machine learning, we don’t mean to impose any restrictions on the methods used in competitions. By “machine learning competition”, we generally mean any competition which meets the Common Task Framework definition (Donoho, 2017: “50 Years of Data Science”) — i.e. a public training set, a set of competitors, and a scoring referee). We exclude any competitions without fixed deadlines. For the sake of this report, we restrict ourselves further to competitions with a deadline in 2022. The general inclusion criteria are explained in more detail on our submission page. ↩︎

-

The data for NeurIPS competitions and prizes was gathered by going to each of the competition sites listed on the NeurIPS Competition Track web page. We were able to verify the annual number of competitions against this presentation from NeurIPS 2022, but were unable to find a source for the total annual prize pool to compare against. It is likely that our estimates of total annual prize pool are slightly lower than the actual numbers, since some competition sites are no longer accessible. We counted only cash prizes, excluding any cloud compute credits or travel grants. ↩︎

-

In our definition of “team”, we’re also including one-person teams. The number of teams doesn’t necessarily reflect the total number of people who participated, but should reflect the number of independent entities competing to win. ↩︎

-

We define “winning” as finishing in the #1 position on the final, private leaderboard. There is certainly an argument to be made that multiple top solutions count as relevant winners — for example, the top 5 on the final leaderboard, or all those who earned a gold medal in a Kaggle competition. This is especially true in competitions where the performance difference between leading solutions is minimal. We are open to doing this analysis in future, but due to resource constraints we had to restrict ourselves to #1 solutions for this report. ↩︎

-

Our questionnaire for winners had a total of 28 questions, including “What was the primary modelling technique used?”, “Which part of your solution do you think was most important in beating other teams?”, and “What kind of cross-validation method did you use?” ↩︎

-

It’s easy to see this by looking at the histogram for Q11 - “For how many years have you been writing code and/or programming?” - in the survey responses dataset. ↩︎

-

Of course, this does not mean that Python is in any way the “best” language for solving machine learning problems, or the only one. As evidenced by the packages used, winning competitors often use Python as an interface to tools written in other languages such as C, C++, or Fortran. ↩︎

-

This data will be biased towards notebooks, since some challenges require code submission in notebook format. ↩︎

-

Private communication with Isaac Liao, aka wololo, winner of Battlecode 2022. ↩︎

-

For more detail on previous years, see our 2020 and 2021 reviews. Note that these were done with a much smaller research budget, which meant we weren’t able to track down the winning solutions for quite as many competitions, so there will be more noise. ↩︎

-

For a look at how PyTorch managed to capture market share from TensorFlow in the research community, see Horace He’s The State of Machine Learning Frameworks in 2019. ↩︎

-

For an exploration of competing computer vision architectures, see Beyond Convolutional Neural Networks (CVPR 2022). ↩︎

-

For an introduction to the Transformer architecture, see The Illustrated Transformer. ↩︎

-

We found two instances where Dask was listed as a requirement, but no use of a Dask dataframe. ↩︎

-

For an example of this, see Greg Park’s blog ↩︎

-

For a more general look at this phenomenon, considering more than just the ultimate #1, see this kaggle notebook which computes a “shake-up” metric based on public-private leaderboard movement on Kaggle competitions. ↩︎

-

“On August 26, 2022, the U.S. government, or USG, informed us that it has imposed a new license requirement, effective immediately, for any future export to China (including Hong Kong) and Russia of our A100 and forthcoming H100 integrated circuits. “ — NVIDIA Form 8-K, filed with the SEC on 31 August 2022. ↩︎

-

For example, do we care about clock-hours or CPU-hours, and if so, how do we compare someone who trained a model on a CPU to someone who trained it on a GPU? Are we measuring raw data, in the form in which it’s provided, or maximally compressed, in the most efficient storage format? Most importantly, we wanted to make the best use of the winners and competition organisers’ limited time. ↩︎

-

Looking at the 9 providers for A100 (80GB) GPUs listed on cloud-gpus.com at the time of writing, the median price is $528/h, with the range being $300-$1228.8/h. (Disclaimer: cloud-gpus.com is run by the same team as mlcontests.com) ↩︎

-

Some experienced Kagglers have written about their approach and experiences. For example, see Philipp Singer’s website or Christof Henkel’s blog. Philipp, AKA Psi, is currently ranked #1 on Kaggle, and Christof (AKA Dieter) has previously been ranked #1. ↩︎

-

"…since I already had a good code base from previous computer vision competitions I could focus on solving the actual problem" — Christof Henkel’s blog. ↩︎

-

“Some of the repeat winners were already renowned Kaggle Grandmasters when they joined H2O, and some of the others became Kaggle Grandmasters as part of H2O. Irrespective of that, H2O truly supports participation in Kaggle and other competitions and also provides resources. Participating in competitions helps the company stay up-to-date with the latest happenings in the field of ML… Our Kaggle Grandmasters have a deep understanding of various machine learning techniques and algorithms… By digitizing their thinking and incorporating it into our software, we enable users to leverage their expertise and benefit from their knowledge.” (Parul Pandey, Principal Data Scientist at H2O.ai, private communication, edited for brevity) ↩︎

-

We determine a team to have previously won if any of the team’s members have previously come first in any competitions. While we think this is a reasonable approach, there are some shortcomings. Since competitors’ contact details and real names are not always public, we don’t have a foolproof way to cross-reference participants across platforms, and it’s likely that we’ve over-estimated the number of first-time winners somewhat. Secondly, having come first is quite a high bar, and not always a good proxy for experience competitors. For example, at the time of winning Kaggle’s Multimodal Single-Cell Integration competition, Shuji Suzuki was already a Kaggle Competitions Grandmaster with 5 gold medals, but had not come first in any competitions. Unfortunately other platforms don’t always have an equivalent to Kaggle’s competition medals, so restricting to first place positions seems like the most reasonable comparable metric. ↩︎